Building a predictive lead scoring system (part 1)

Components and the process behind building a predictive solution

by Miro Maraz

March, 2018

Predictive Analytics, Lead Scoring

Recruitment / Talent Agency

Machine Learning

Successful organizations are increasingly turning to predictive analytics to drive their bottom line and establish a competitive advantage. Building and adopting a predictive system is a non-trivial undertaking that requires dedicated expertise. However, understanding the fundamentals of such a system (how it works and the process behind it) is key for every decision-maker involved, because successful adoption rests on an effective pairing of the technology and business sides of the house. This is the first post in a series in which we explore the building blocks of such a predictive system, using predictive lead scoring in the recruitment space as our example.

What is predictive analytics?

Generally, analytics is the discovery and communication of meaningful patterns in data. Predictive analytics focuses specifically on patterns that let us understand and anticipate future events. The goal is to extract the signals buried in the data that drive outcomes, and to provide a means of applying those signals to future events. This is essentially the training and scoring process. By automating this process and delivering relevant insights to end users, managers or applications, predictive solutions empower business users to make fast, informed, consistent decisions and enhance their intuition. In practice, the best outcomes come from exactly this kind of tight coupling between human subject-matter expertise and effective data-driven assessment (scores) based on years of relevant historical events.
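To make the split between training and scoring concrete, here is a deliberately tiny Python sketch. Its "model" simply learns a smoothed placement rate for each value of a single attribute. The field names (`category`, `placed`), the smoothing weight and the sample records are illustrative assumptions, not part of the solution described in this post.

```python
from collections import defaultdict


def train(history, feature, weight=1.0):
    """Training: learn a smoothed placement rate for each value of one attribute."""
    overall = sum(r["placed"] for r in history) / len(history)
    counts = defaultdict(lambda: [0, 0])  # value -> [placements, requisitions]
    for r in history:
        counts[r[feature]][0] += r["placed"]
        counts[r[feature]][1] += 1
    # Additive smoothing pulls rarely seen values toward the overall rate.
    rates = {v: (p + weight * overall) / (n + weight) for v, (p, n) in counts.items()}
    return {"feature": feature, "rates": rates, "default": overall}


def score(model, requisition):
    """Scoring: apply the learned rates to a new requisition; unseen values get the overall rate."""
    value = requisition.get(model["feature"])
    return model["rates"].get(value, model["default"])


# Hypothetical historical records: 1 = placed, 0 = not placed.
history = [
    {"category": "nursing", "placed": 1},
    {"category": "nursing", "placed": 1},
    {"category": "it", "placed": 0},
    {"category": "it", "placed": 1},
]
model = train(history, "category")
print(round(score(model, {"category": "nursing"}), 3))  # 0.917
print(score(model, {"category": "legal"}))               # 0.75 (unseen value -> overall rate)
```

Real systems learn from many attributes at once, but the shape is the same: `train` runs once over history, and `score` runs on every new requisition.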

Building predictive models

At the core of any intelligent decision support system are predictive models. We can think of these models as simplified representations of reality. They are the result of describing historical events with data and having algorithms learn complex patterns from that data; the models then use what they have learned to estimate or predict outcomes for future events. The path to building a predictive model and generating predictions typically follows some variation of a well-defined cycle, whose steps we walk through below.

Use Case: Lead Scoring for Staffing Agency

As we walk through the steps of this process, we’ll draw practical insights from a solution we developed this way. Our business context is medium to large staffing agencies: organizations that work to fill hundreds or thousands of new job requisitions per month. We would like to enable these organizations to understand the value of a job requisition the moment it is submitted to them, so they can decide which jobs deserve their effort. This matters because resources are limited and sourcing candidates for these positions is very time-consuming, so how the work is prioritized is key to optimizing the placement rate and revenue.

1. PROBLEM DEFINITION

Step one is always defining the problem: a use case. It’s the thing we would like to explain or know ahead of time. This is a critical step, one that needs involvement from business users, decision-makers and domain experts working together with data scientists and engineers. Ultimately, it is the business that must home in on the key piece of information that would give it a valuable advantage, and the engineers and scientists who must outline a practical path to get there.

In our case, we are trying to understand and predict the value of a new job requisition. The business hypothesis is that if we can understand, ahead of time, the likelihood of placement, the likelihood of revenue, the revenue potential ($), the hourly rate or even the risk of losing to a competitor, we can prioritize more effectively, focus our efforts on the best opportunities and close more leads faster. The system should make these predictions in real time, because the volume of incoming requisitions is significant. Finally, we would also like to broadly understand what drives both positive and negative outcomes, for strategic purposes.

2. DATA EXPLORATION

Building effective models requires a lot of relevant data. Data typically comes from various systems that are sometimes wildly different, so this step focuses on understanding what data is at our disposal. We want to identify what is potentially useful or problematic, and organize it into domains or groups sorted by relevance or potential.

For effective lead scoring of job requisitions, we need data that describes the job requisitions our organization handled in the past, as well as their outcomes. That is, “here is what we knew about that job requisition at the time, and here is what happened”. Part of this process is developing attributes that let us describe what is known with data: what kind of position the job was for, what kind of organization it came from, who worked on it, how much effort was spent, how much revenue it brought, and so on. We can also look to outside sources to round out the information with things like the strength of the candidate pool or the state of the job market and economy.
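Describing what we knew about a requisition at the time usually means taking a point-in-time snapshot: only attribute values recorded up to the moment the requisition was submitted become features, while later changes belong to the outcome side. The sketch below assumes a hypothetical event log of `(timestamp, field, value)` tuples per requisition; real CRM data will look different.

```python
from datetime import datetime


def snapshot(events, as_of):
    """Return each field's latest value recorded at or before `as_of`."""
    state = {}
    for ts, field, value in sorted(events, key=lambda e: e[0]):
        if ts > as_of:
            break  # later events describe what happened, not what we knew
        state[field] = value
    return state


def training_example(events, submitted_at, outcome):
    """Pair the point-in-time attributes with the eventual outcome."""
    return {"features": snapshot(events, submitted_at), "label": outcome}


# Hypothetical event log for one requisition.
events = [
    (datetime(2017, 3, 1, 9), "title", "RN - ICU"),
    (datetime(2017, 3, 1, 9), "bill_rate", 85.0),
    (datetime(2017, 3, 2, 14), "bill_rate", 92.0),  # renegotiated after submission
    (datetime(2017, 3, 9, 16), "candidates_submitted", 4),
]
example = training_example(events, datetime(2017, 3, 1, 9), outcome="placed")
print(example["features"])  # {'title': 'RN - ICU', 'bill_rate': 85.0}
```

Which attributes count as known at submission time is a judgment call for the team; the point of the sketch is only that the cutoff is applied consistently to every historical record.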

3. DATA SELECTION & PREPARATION

Once we’ve outlined which data is available and potentially useful, the next step is to select the most promising data and begin working with it. Unfortunately, data found in most systems is not fit to be used directly to build predictive models. We can’t just feed the model some tables from our CRM system or a data feed exported from the ERP system. In a sense, predictive algorithms are dumb: they need all the relevant data lined up and structured just so, and only then do they become incredibly smart and powerful. The data therefore needs to be mapped, merged, transformed and cleaned in order to be useful. It also must be flattened into a single dataset, so that each observation (the thing we are trying to learn about) is one row and each column is an attribute that describes the observation. This is one of the most challenging parts of the process, and it is typically done iteratively alongside the next two steps.
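The flattening step can be illustrated with a small sketch that joins a hypothetical requisitions table with a client table and rolls up a one-to-many skills table, so each requisition ends up as exactly one row. All table layouts and column names here are assumptions; with real CRM or ERP extracts, a library such as pandas would typically handle the joins and aggregations.

```python
from collections import Counter

# Hypothetical source tables.
requisitions = [
    {"req_id": 1, "client_id": "A", "title": "Java Developer"},
    {"req_id": 2, "client_id": "B", "title": "Staff Accountant"},
]
clients = {
    "A": {"industry": "Software", "tier": "Gold"},
    "B": {"industry": "Finance", "tier": "Silver"},
}
requisition_skills = [  # many rows per requisition
    {"req_id": 1, "skill": "java"},
    {"req_id": 1, "skill": "spring"},
    {"req_id": 1, "skill": "sql"},
    {"req_id": 2, "skill": "gaap"},
]


def flatten(requisitions, clients, skills):
    """One row per requisition: its own fields, joined client fields, aggregated skills."""
    skill_counts = Counter(s["req_id"] for s in skills)
    rows = []
    for req in requisitions:
        client = clients.get(req["client_id"], {})  # unknown client -> None values below
        rows.append({
            **req,
            "client_industry": client.get("industry"),
            "client_tier": client.get("tier"),
            "n_skills": skill_counts[req["req_id"]],  # Counter returns 0 if absent
        })
    return rows


for row in flatten(requisitions, clients, requisition_skills):
    print(row)
```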

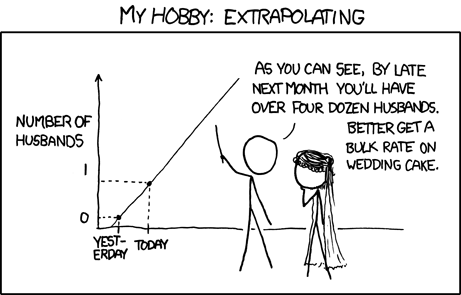

Why managing scope and understanding complexity is key (xkcd)

Because data munging is complicated and therefore expensive, we are always looking for efficiencies in this step. In our case, we can likely produce a base dataset that describes the job requisitions in a way that can be reused for multiple models. Think of it as a base from which we will determine the specific factors that drive placement success, hourly rate, profit percentage, etc. Chances are many of the drivers are similar, so focusing on fundamental information makes sense. Starting with data that is vetted, readily available and simple to encode is also a good idea, leaving the most complicated sources for later iterations. A common mistake at this stage is to spend massive amounts of time trying to extract a very specific or complicated piece of information. For example, an “engagement score” for every account manager working our requisitions, computed from their communication, would be a very complex metric, and we do not yet know whether it would be useful. The goal should be to get to a baseline model as soon as possible; we may find that the model performs just fine without very complex attributes. There are two good rules of thumb here:

1. Your first development model should be simple and probably bad, but built very fast

2. Your first deployed model should be just good enough to help, and no more

In each case, what you learn from having a working model far outweighs the effort spent working speculatively on data issues, a process that can drag on indefinitely if you allow it. There will always be more data to add, but our goal is practical: we need to make an impact.
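In the spirit of the first rule, the fastest possible baseline for a placement model ignores every attribute and predicts the historical placement rate for every requisition. The sketch below builds that baseline and evaluates it with the Brier score (the mean squared error of predicted probabilities, lower is better). Both the metric choice and the labels are illustrative assumptions; any real model should beat this number.

```python
def fit_base_rate(labels):
    """'Train' the baseline: the share of historical requisitions that were placed."""
    return sum(labels) / len(labels)


def brier(probabilities, labels):
    """Mean squared error between predicted probabilities and 0/1 outcomes (lower is better)."""
    return sum((p - y) ** 2 for p, y in zip(probabilities, labels)) / len(labels)


# Illustrative placement outcomes (1 = placed), not real data.
train_labels = [1, 0, 0, 1, 0, 0, 0, 1]
valid_labels = [0, 1, 0, 0]

rate = fit_base_rate(train_labels)     # 0.375
baseline = [rate] * len(valid_labels)  # same score for every requisition
print(brier(baseline, valid_labels))   # 0.203125
```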

4. MODEL TRAINING & VALIDATION

The goal of this step is to produce a series of models that meet the expectations of the business and the technical requirements of the analytics experts. Here the data is further refined, new derived variables are created, and thousands of models are built, tuned and tested to find the few that meet our expectations.

It’s a balancing act between more complex models (in the number of attributes and the type of algorithm) that are harder to explain, and simpler models that might be good enough and run faster. The aim is a model that captures the historical facts well but also generalizes well enough to produce meaningful predictions in the future. This step is certainly a mix of art and science. It takes both a structured process and applied model-development experience to know what to try and how, how to validate your assumptions and, critically, when to stop.
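One common way to check that a model generalizes, rather than memorizing history, is a time-based holdout: train only on requisitions submitted before a cutoff date and validate on those submitted on or after it, which mimics how the model will later meet genuinely new requisitions. A minimal sketch, with an assumed record layout and made-up dates:

```python
from datetime import date


def time_split(records, cutoff):
    """Train on requisitions submitted before `cutoff`; validate on those submitted on or after it."""
    train_set = [r for r in records if r["submitted"] < cutoff]
    valid_set = [r for r in records if r["submitted"] >= cutoff]
    return train_set, valid_set


# Hypothetical records; only the submission date matters for the split.
records = [
    {"submitted": date(2017, 1, 15), "placed": 1},
    {"submitted": date(2017, 4, 2), "placed": 0},
    {"submitted": date(2017, 9, 30), "placed": 1},
    {"submitted": date(2017, 11, 5), "placed": 0},
]
train_set, valid_set = time_split(records, cutoff=date(2017, 9, 1))
print(len(train_set), len(valid_set))  # 2 2
```

The same metric is then computed on both sets; a model that looks far better on the training set than on the validation set is likely overfitting.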

This is a great place to narrow down the scope and refine our approach. We will most likely end up with some models that perform well and some that don’t. Maybe we are fairly confident about predicting the likelihood of a successful placement, but our prediction of hourly rate is less trustworthy. This is the place to discuss findings and performance with the business folks, share examples and get their input. It is almost expected that we will not be able to predict every outcome; predictive modeling is not magic. If the data does not contain the driving signal (the key information needed to predict an outcome), the model will not perform well. Performance is also a sliding scale, however: this is not about being right every single time. It is about being consistent and better than average intuition or the existing process. That’s exactly the value: how much would this model be an improvement over the status quo?
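The question of how much a model improves on the status quo can be put into numbers by scoring the model and a reference on the same held-out requisitions. The sketch below uses the Brier skill score, 1 - BS_model / BS_reference, where positive values mean the model beats the reference. Treating a flat 50% guess as the status quo, and the example probabilities themselves, are illustrative assumptions; in practice the reference might be the historical base rate or a score derived from the existing process.

```python
def brier(probabilities, labels):
    """Mean squared error between predicted probabilities and 0/1 outcomes."""
    return sum((p - y) ** 2 for p, y in zip(probabilities, labels)) / len(labels)


def skill_score(model_probs, reference_probs, labels):
    """1 - BS_model / BS_reference: > 0 beats the reference, 0 ties, < 0 is worse."""
    return 1 - brier(model_probs, labels) / brier(reference_probs, labels)


labels = [0, 1, 0, 0]                 # illustrative held-out outcomes
status_quo = [0.5, 0.5, 0.5, 0.5]     # hypothetical reference: every requisition a coin flip
model = [0.25, 0.75, 0.25, 0.5]       # hypothetical model probabilities
print(skill_score(model, status_quo, labels))  # 0.5625
```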

5. MODEL EVALUATION & DEPLOYMENT

If multiple viable models were produced, the next step is to decide which model or models to deploy to production. We can pick a champion model or use multiple models for a more diversified score; there are even techniques to stack multiple models together or produce a sort of consensus. Generally, the approach is to select the technique that performs best in validation testing, but there may be other concerns, such as how easy the model is to explain or how fast it scores. Once a decision has been made, the models are deployed into a scoring engine that wraps them with an interface (API) so we can interact with them. This means we can take new data (job requisitions, leads) and generate scores using the deployed models. Scoring is typically performed in batch or in real time; a solid scoring engine supports both modes of operation for maximum flexibility.
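A scoring engine can be sketched as a thin wrapper around one or more trained models. In this illustration each model is assumed to be a plain Python callable mapping a feature dict to a probability; the engine averages them (a simple consensus, with a single model being the champion case) and exposes one method for real-time scoring and one for batch scoring. The two toy models are hypothetical, and the HTTP API layer a production engine would add is omitted.

```python
from statistics import fmean
from typing import Callable, Dict, List

Model = Callable[[Dict], float]  # assumed interface: feature dict -> probability


class ScoringEngine:
    """Wraps one model (champion) or several (simple averaged consensus)."""

    def __init__(self, models: List[Model]):
        if not models:
            raise ValueError("at least one model is required")
        self.models = list(models)

    def score(self, record: Dict) -> float:
        """Real-time mode: score a single record as it arrives."""
        return fmean(model(record) for model in self.models)

    def score_batch(self, records: List[Dict]) -> List[float]:
        """Batch mode: score many records with exactly the same logic."""
        return [self.score(record) for record in records]


# Two toy rules standing in for trained models (hypothetical).
def rate_model(record):
    return 0.75 if record["bill_rate"] >= 80 else 0.25


def urgency_model(record):
    return 0.5 if record["urgent"] else 0.25


engine = ScoringEngine([rate_model, urgency_model])
print(engine.score({"bill_rate": 90, "urgent": True}))  # 0.625
```

Routing batch scoring through the same `score` method is a deliberate choice: batch and real-time results cannot diverge, because they run through identical code.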

In our case, one of the key requirements is that we want to pass in the job requisition in its native JSON format to make the system as usable as possible. This means the scoring engine needs to perform an additional transformation step that converts new requisition records into the format the models expect. If we decide to use external sources like BLS or search data, that information must be added at scoring time as well. Finally, once the scoring engine is up, we can run a true validation test: we score a set of records that were withheld from the models until now and whose outcomes we know, which also validates our scoring-time transformation and data enrichment process. All these pieces must work together; the best scoring model in the world is useless if the record it is trying to score has incomplete or incorrectly encoded information.
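The scoring-time transformation can be sketched as a function that takes the requisition in its native JSON form, rejects records missing essential fields, maps the rest onto the flat layout the models expect and joins in external data. The JSON field names (`title`, `billRate`, `openings`, `location.state`) and the `MARKET_DATA` table, standing in for a source such as BLS, are hypothetical placeholders.

```python
import json

# Hypothetical enrichment table keyed by state. The values are placeholders
# standing in for external data such as BLS statistics; they are not real figures.
MARKET_DATA = {"PA": {"unemployment_rate": 4.8}, "NY": {"unemployment_rate": 4.6}}
REQUIRED = ("title", "billRate")  # hypothetical field names in the native JSON


def to_features(raw_json: str) -> dict:
    """Map a native JSON requisition onto the flat layout the models expect."""
    req = json.loads(raw_json)
    missing = [key for key in REQUIRED if req.get(key) in (None, "")]
    if missing:
        # Refuse to score rather than silently score an incomplete record.
        raise ValueError(f"requisition is missing required fields: {missing}")
    state = (req.get("location") or {}).get("state")
    return {
        "title": req["title"].strip().lower(),
        "bill_rate": float(req["billRate"]),
        "openings": int(req.get("openings", 1)),
        "state": state,
        # Enrichment at scoring time; None means "unknown", not zero.
        "unemployment_rate": MARKET_DATA.get(state, {}).get("unemployment_rate"),
    }


payload = '{"title": " Registered Nurse ", "billRate": "72.5", "location": {"state": "PA"}}'
print(to_features(payload))
```

Ideally, the same function also builds the training rows, so the representation seen at training time and at scoring time cannot drift apart.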

6. MONITORING & PERFORMANCE ANALYTICS

Monitoring and performance analytics are a natural extension of a predictive solution. Once we start generating predictions, it is crucial to understand how the system performs and to monitor it for anomalies and possible degradation. Analytics can help us understand areas for improvement, see trends in our scores and track key performance indicators over time, so that we have solid facts about both performance and ROI to share with the business.
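For watching the score stream for drift, one widely used measure is the population stability index (PSI), which compares the distribution of recent scores with a reference window, such as the scores on the validation set: PSI = Σ (actual_i - expected_i) · ln(actual_i / expected_i), summed over score bins. A minimal sketch, assuming scores in [0, 1] and ten equal-width bins; the thresholds in the docstring are common rules of thumb, not hard limits, and the sample scores are made up.

```python
import math


def bin_shares(scores, bins=10):
    """Share of scores falling into each equal-width bin on [0, 1]."""
    counts = [0] * bins
    for s in scores:
        counts[min(int(s * bins), bins - 1)] += 1  # a score of exactly 1.0 goes in the last bin
    return [c / len(scores) for c in counts]


def psi(reference, recent, bins=10, eps=1e-6):
    """Population stability index between two score samples.

    Rough rule of thumb: < 0.1 stable, 0.1-0.25 worth a look, > 0.25 significant shift.
    """
    expected, actual = bin_shares(reference, bins), bin_shares(recent, bins)
    # eps keeps empty bins from producing log(0) or division by zero.
    return sum((a - e) * math.log((a + eps) / (e + eps)) for e, a in zip(expected, actual))


reference = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
shifted = [0.7, 0.75, 0.8, 0.85, 0.9, 0.9, 0.95, 0.99]
print(round(psi(reference, reference), 6))  # 0.0
print(psi(reference, shifted) > 0.25)       # True
```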

In the best-case scenario, the analytics are part of the solution. This is not a requirement, since you can bolt on any third-party offering, but it is nice to have an interface, perhaps a dashboard or two and some visualizations to share with the business. It also increases confidence in the system if these are available to decision-makers themselves, so they understand the fluctuations and can react, and alert the data scientists if something does not look right.

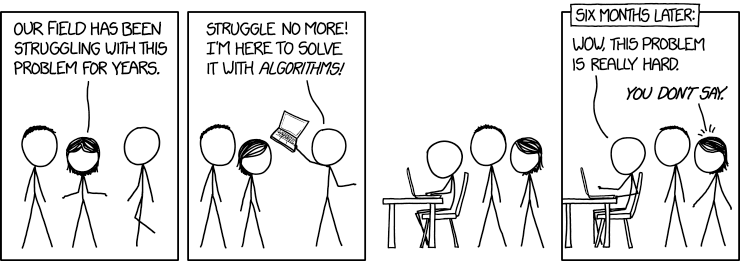

Why a predictive system is more than Machine Learning (xkcd)

7. MAINTENANCE, FINE-TUNING, UPDATE & SUPPORT

The work of predictive analytics essentially never ends. As new data accumulates and changes over time, we need to think about model maintenance: a periodic model refresh in order to capture the newest trends and patterns. We can use everything we have learned during the process so far to generate new models daily, weekly or at any other frequency, depending on the use case and how rapidly the actual data changes. We also have to be prepared to support our business users when they have questions, and to diagnose and explain anomalies (for example, never-before-seen cases). Finally, there is the work of keeping the solution patched, updated and supported so that it stays stable, secure and performing to expectations.

For our job requisitions solution, we use several analytics perspectives to track the system. One dashboard gives us a view of historical outcomes and how they are trending, allowing us to pick up any changes in the disposition of the business or in the data the models are learning from. Another dashboard captures the scores being generated by the system, their distribution and how they correspond with actual outcomes. Finally, when we refresh the models at quarterly intervals, we produce a certification report that documents the model’s progress, how well it is learning and which factors drive its predictions, so that we can track its evolution and ensure the system keeps getting better over time.
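The dashboard comparing generated scores with actual outcomes boils down to a table like the one computed below: group scored requisitions into score bands and, for each band, compare the average predicted probability with the share that actually ended in a placement. If the model is well calibrated, the two columns track each other. The band edges and the sample data are made up for illustration.

```python
def calibration_table(scores, outcomes, edges=(0.0, 0.25, 0.5, 0.75, 1.0)):
    """For each score band [lo, hi), report count, mean score and observed placement rate."""
    rows = []
    for lo, hi in zip(edges, edges[1:]):
        last = hi == edges[-1]
        band = [(s, y) for s, y in zip(scores, outcomes)
                if lo <= s < hi or (last and s == hi)]  # include 1.0 in the top band
        if not band:
            continue  # skip empty bands rather than divide by zero
        rows.append({
            "band": f"{lo:.2f}-{hi:.2f}",
            "n": len(band),
            "mean_score": sum(s for s, _ in band) / len(band),
            "placement_rate": sum(y for _, y in band) / len(band),
        })
    return rows


# Illustrative scores and outcomes (1 = placed), not real data.
scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
outcomes = [0, 0, 0, 1, 1, 0, 1, 1]
for row in calibration_table(scores, outcomes):
    print(row)
```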

We also containerize the entire solution, which allows us to deploy cleanly and quickly into any environment and helps tremendously with the overall utility and flexibility of the system.

I hope this post gave you a good overview of the predictive analytics process and some practical hints on how to think about an implementation. In the next post, I will focus on the functional components of a predictive solution like this one and outline the architecture of a complete solution.

If you are interested in finding out more about this application of predictive lead scoring in recruitment, check out the product page for ReQue: Predictive Lead Scoring for Staffing Agencies.

In the meantime, if you have any thoughts or questions, feel free to reach out via email or on social media. We’re always curious to hear about the real-world predictive analytics challenges folks are working on.

Want to score your job requisition leads?

We make this lead scoring process available via a platform called ReQue; you can learn more about it here.

If you're interested in how ReQue can help you, we'd love to talk to you. Please get in touch to schedule a brief call.

Untitled Research

Intelligent data applications for business.

© 2017 Untitled Research, LLC. - All Rights Reserved

Contact Us

info@untitled-research.com

+1 803 610 1127

Philadelphia, PA, USA
